
DeepAccident

A Motion and Accident Prediction Benchmark for V2X Autonomous Driving

About DeepAccident

DeepAccident is the first V2X (vehicle-to-everything) autonomous driving dataset built in simulation that contains diverse collision accidents of the kinds that commonly occur in real-world driving scenarios. It is developed by HKU MMLab and Huawei Noah's Ark Lab. The dataset focuses on autonomous driving safety and motivates a new task, End-to-End Motion and Accident Prediction, which can serve as a direct metric for evaluating the safety of autonomous driving algorithms. Such direct safety evaluation is not possible with existing real-world datasets, since they contain only normal, safe scenarios. Other simulator-based datasets, meanwhile, do not focus on evaluating the safety of autonomous driving algorithms and contain few or no accident scenarios. The accident scenarios in DeepAccident are designed and synthesized with the realistic simulator CARLA under different weather and lighting conditions to provide more diversity.

DeepAccident provides: (1) multi-modality sensor data, including multi-view RGB cameras and LiDAR; (2) synchronized sensor recordings from four vehicles and one infrastructure unit in each scenario, enabling V2X research; and (3) diverse ground truth labels that support both perception tasks (3D object detection and tracking, and BEV semantic segmentation) and prediction tasks (motion and accident prediction).

Dataset Features

Accident Scenarios

Diverse accident scenarios across different places under various weather and lighting conditions.

Accident moment

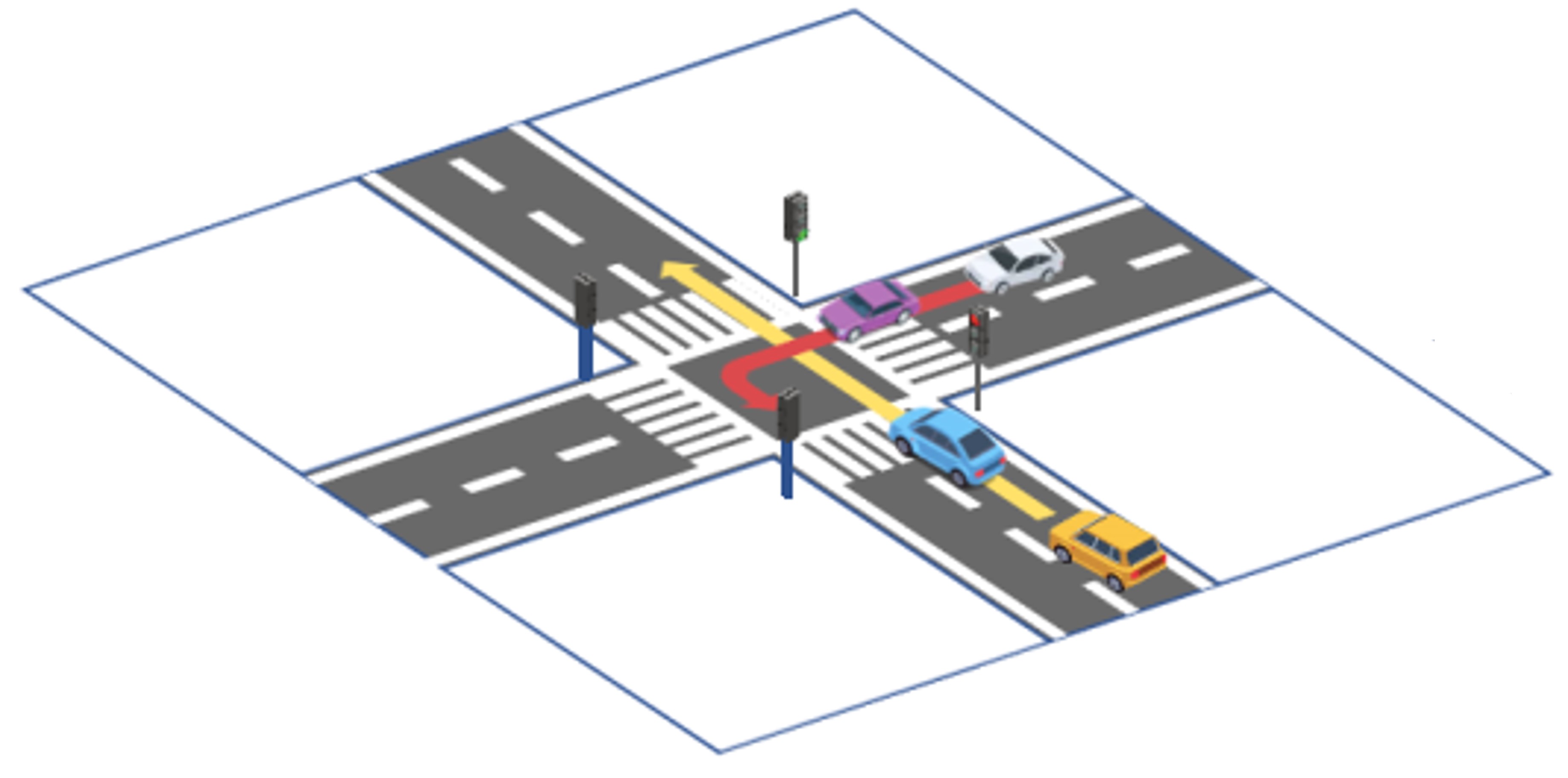

Multi-Agent Data

Synchronized data from four V2X vehicles and one V2X infrastructure unit, providing diverse viewpoints.

Collaborative autonomous driving

V2X settings of DeepAccident

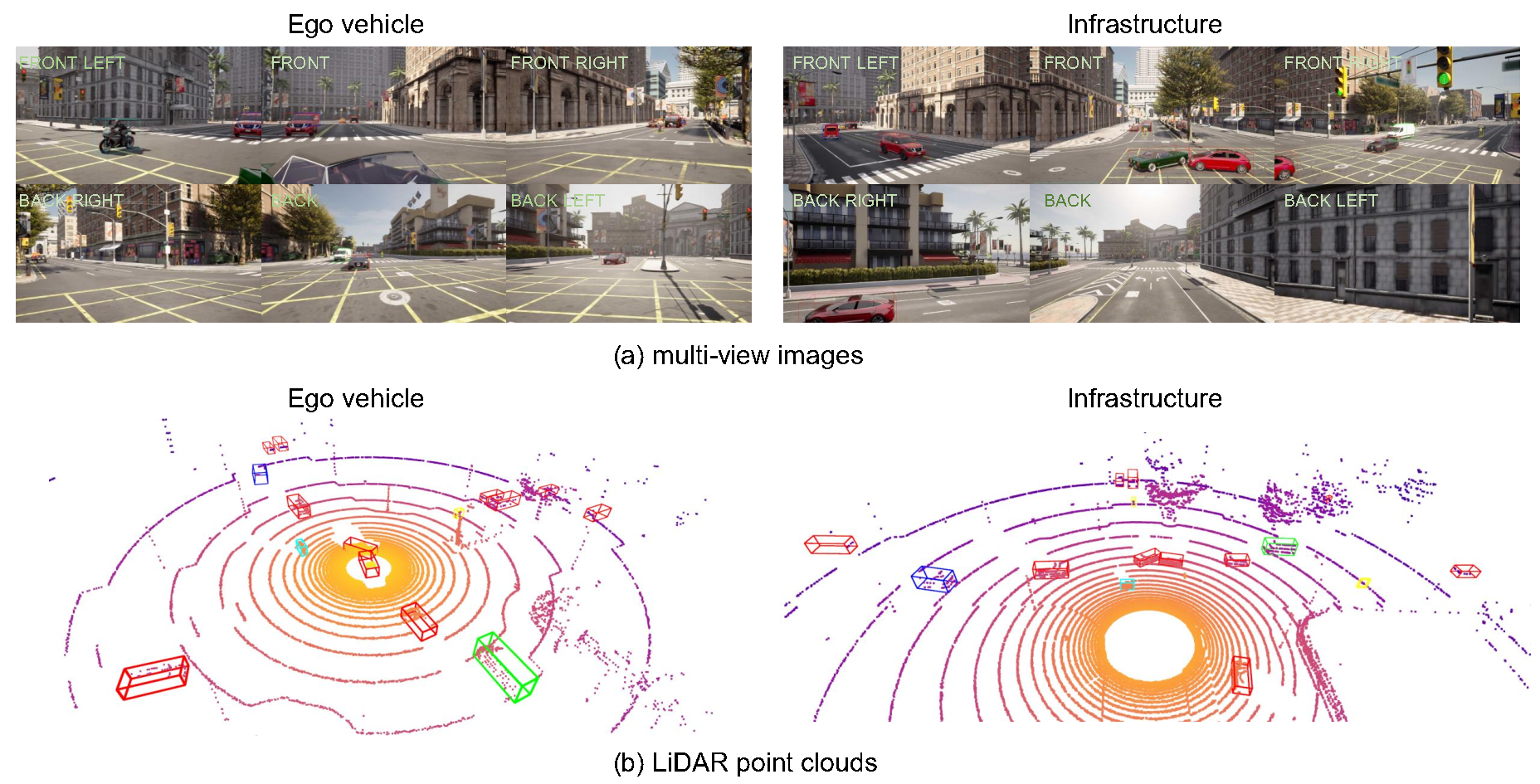

Multi-Modality Sensors

Multi-modality sensors including LiDAR and multi-view RGB cameras on each V2X agent.

LiDAR

Camera

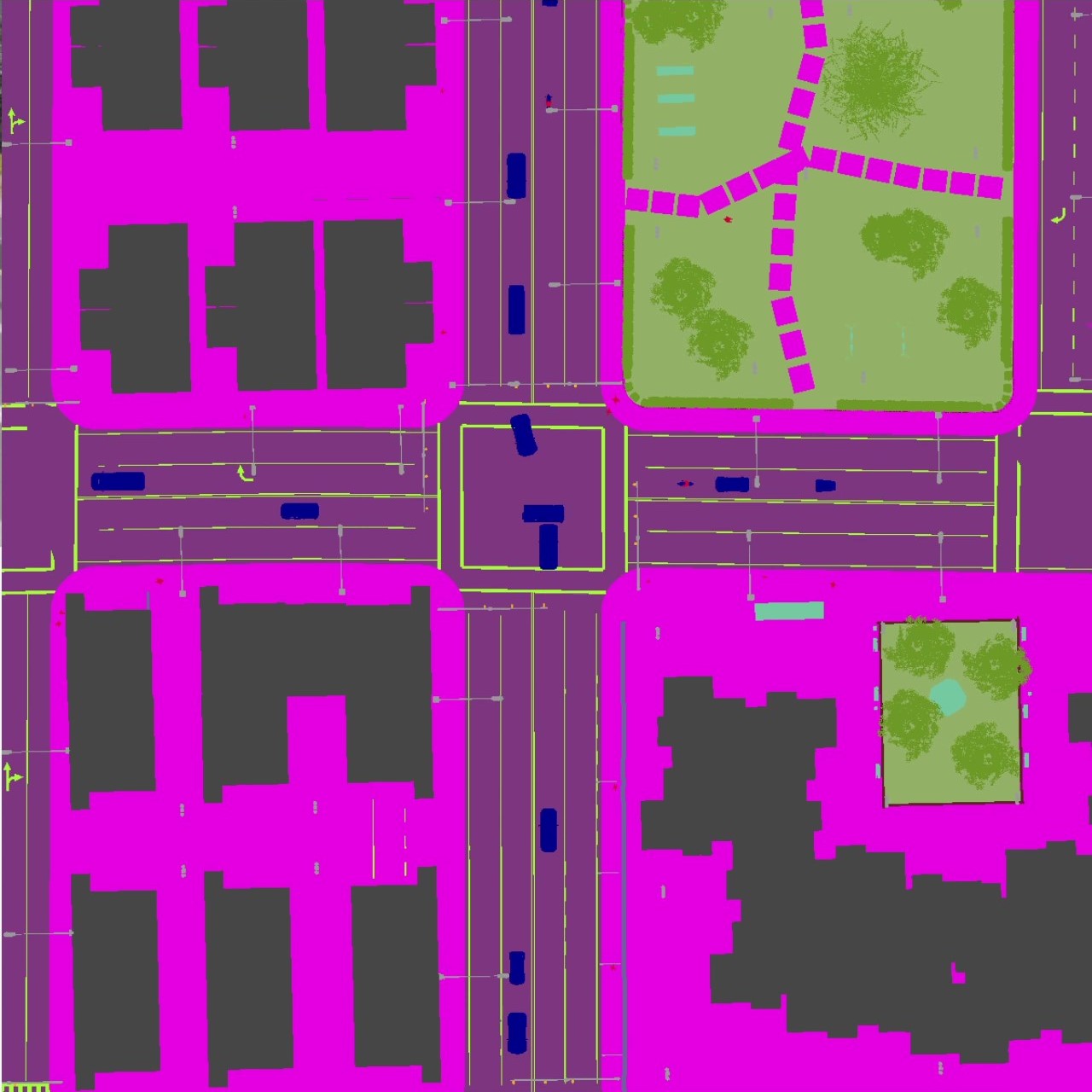

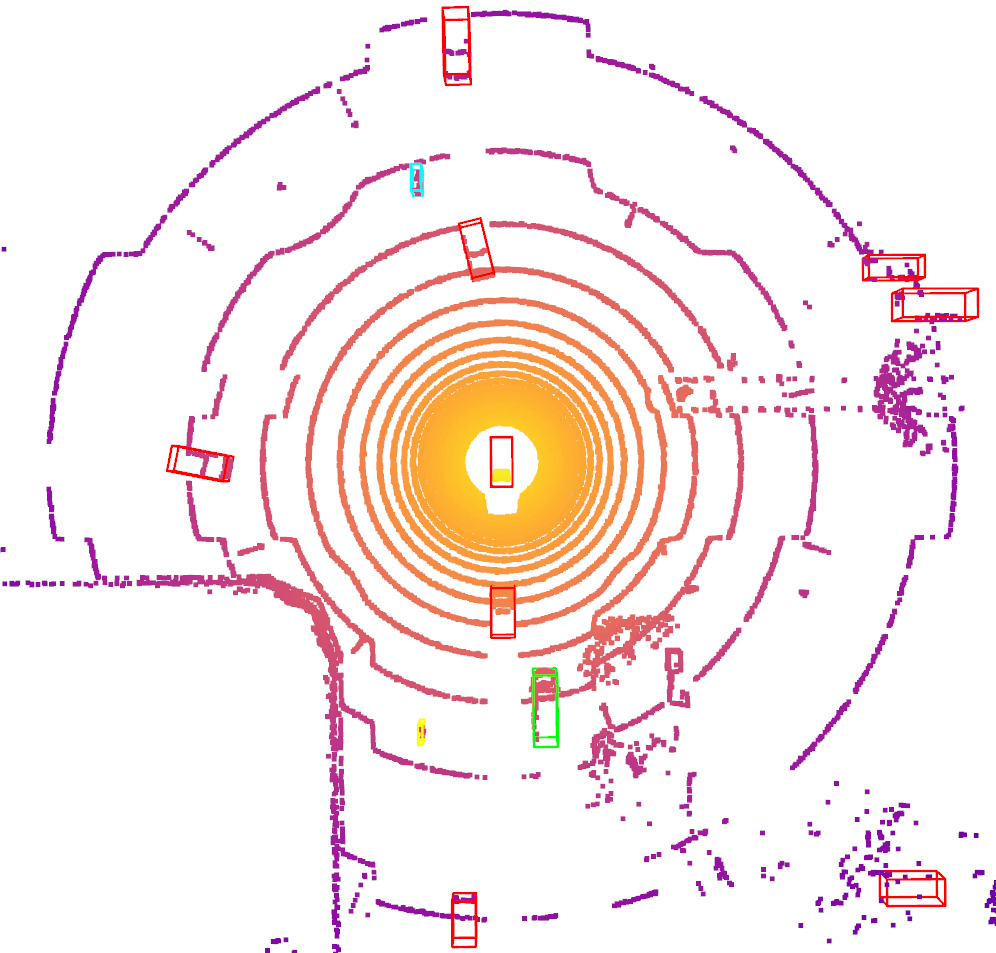

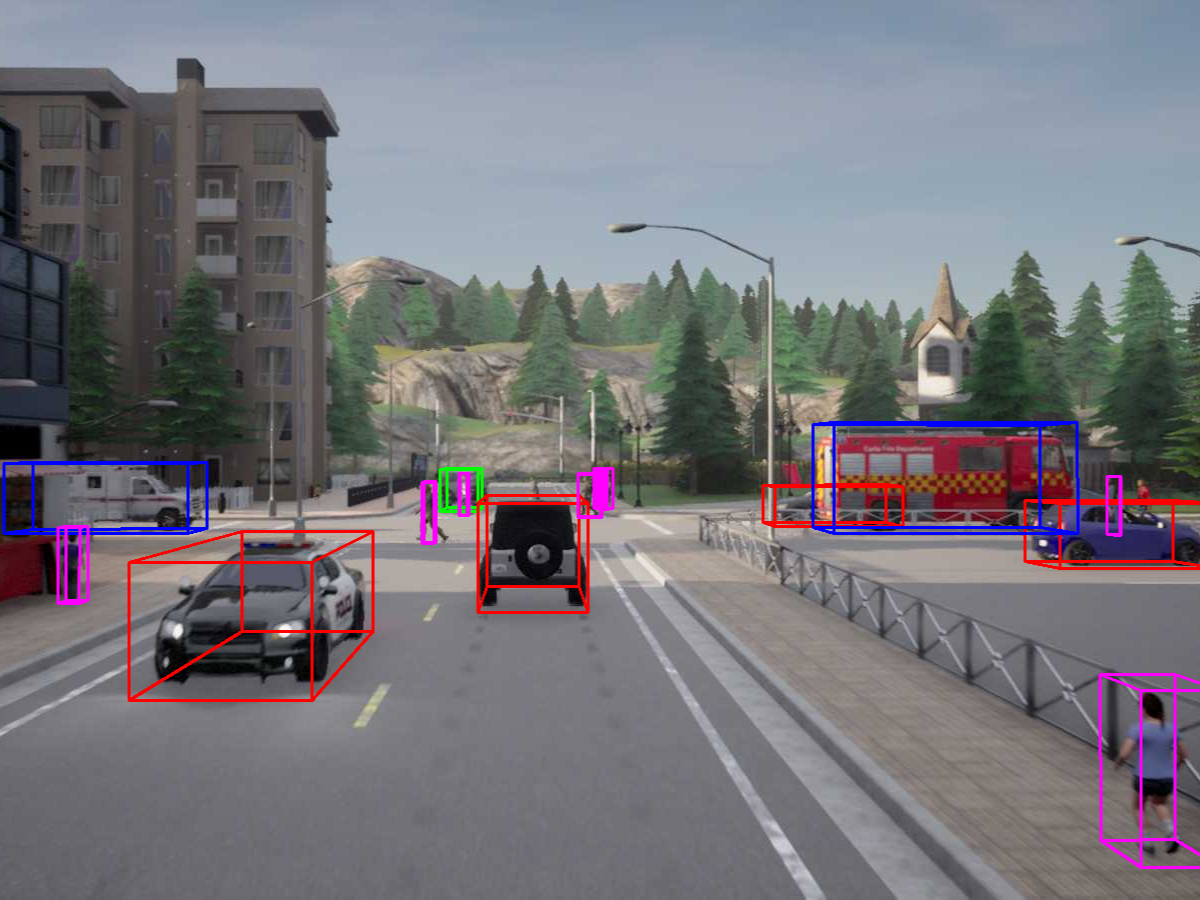

Diverse Annotations

Ground truth labels for motion and accident prediction, BEV semantic segmentation, and 3D object detection and tracking.

Motion & accident prediction

BEV semantic segmentation

Bounding box on LiDAR

Bounding box on image

Diverse Scenarios

Accident Scenario Visualization

BEV Semantic Segmentation

Multi-View Cameras

Motion and Accident Prediction Task

V2X Settings

- Two vehicles designed to collide with each other

- Two vehicles following behind the colliding vehicles

- One infrastructure unit equipped with a full set of sensors
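As a rough illustration of the per-scenario agent layout listed above, the Python sketch below models the five synchronized agents as plain records and checks the four-vehicle plus one-infrastructure composition. All names here (`V2XAgent`, `AgentKind`, `summarize`, the agent identifiers and role strings) are hypothetical and do not reflect DeepAccident's actual file layout or loading API; the sensor tuple only restates that each agent carries LiDAR and multi-view RGB cameras.

```python
from dataclasses import dataclass
from enum import Enum


class AgentKind(Enum):
    VEHICLE = "vehicle"
    INFRASTRUCTURE = "infrastructure"


@dataclass(frozen=True)
class V2XAgent:
    name: str    # hypothetical identifier, not the dataset's real folder name
    kind: AgentKind
    role: str    # hypothetical role label: "colliding", "following", or "roadside"
    # Every V2X agent carries LiDAR plus multi-view RGB cameras.
    sensors: tuple[str, ...] = ("lidar", "multi_view_rgb_cameras")


# Hypothetical roster of the five synchronized agents in one scenario.
SCENARIO_AGENTS = (
    V2XAgent("colliding_1", AgentKind.VEHICLE, "colliding"),
    V2XAgent("colliding_2", AgentKind.VEHICLE, "colliding"),
    V2XAgent("follower_1", AgentKind.VEHICLE, "following"),
    V2XAgent("follower_2", AgentKind.VEHICLE, "following"),
    V2XAgent("infrastructure", AgentKind.INFRASTRUCTURE, "roadside"),
)


def summarize(agents):
    """Count agents per role and per kind (vehicle vs. infrastructure)."""
    role_counts = {}
    for agent in agents:
        role_counts[agent.role] = role_counts.get(agent.role, 0) + 1
    n_vehicles = sum(a.kind is AgentKind.VEHICLE for a in agents)
    n_infra = sum(a.kind is AgentKind.INFRASTRUCTURE for a in agents)
    return role_counts, n_vehicles, n_infra


if __name__ == "__main__":
    roles, n_vehicles, n_infra = summarize(SCENARIO_AGENTS)
    print(roles, n_vehicles, n_infra)
    # {'colliding': 2, 'following': 2, 'roadside': 1} 4 1
```

Keeping the roster as an immutable tuple of frozen records makes it easy to assert the expected composition before iterating over per-agent sensor data, whatever the real on-disk format turns out to be.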

V2X Settings

(For illustration, the cameras are placed behind the vehicles.)

Vehicle Side

Infrastructure Side

Multi-Modality Sensors

Citation

If you find the dataset helpful, please consider citing us.

@article{Wang_2023_DeepAccident,
  title = {DeepAccident: A Motion and Accident Prediction Benchmark for V2X Autonomous Driving},
  author = {Wang, Tianqi and Kim, Sukmin and Ji, Wenxuan and Xie, Enze and Ge, Chongjian and Chen, Junsong and Li, Zhenguo and Luo, Ping},
  journal = {arXiv preprint arXiv:2304.01168},
  year = {2023}
}